TL;DR: We have built cloud enumeration scripts, now available at https://github.com/NotSoSecure/cloud-service-enum/. These scripts allow pentesters to validate which cloud services a given token (API keys, OAuth tokens and more) can access.

Cloud environments are increasingly popular, and we are seeing a steady rise in their use in production. From the Internet, most cloud servers do not look any different from traditional servers; once you get access to a server, however, things start to change. Cloud environments use tokens such as API keys, OAuth tokens or managed identities for identity and access management. Attackers can obtain these tokens using a variety of techniques, such as a Server Side Request Forgery (SSRF) attack, where a server performs an action on behalf of the attacker and returns the response to the attacker, or a command injection vulnerability in a cloud-hosted app. Tokens can also be leaked through accidental disclosure on code sharing sites such as Pastebin or GitHub, where they can be discovered with OSINT techniques. We have publicly documented such instances: Exploiting SSRF in AWS Elastic Beanstalk and Identifying & Exploiting Leaked Azure Storage Keys.

In this blog post, Aditya Agrawal explores some post-exploitation techniques for AWS, GCP and Azure, centered on resource enumeration once such tokens have been obtained.

Credentials obtained through exploitation are often restricted or low privileged. As a result, not every resource can be enumerated, and some resources, such as S3 buckets, may not be returned at all, as we discovered in our NotSoSecure AWS Beanstalk research.

This is why we built a set of tools for the three major cloud vendors to enumerate these environments and extract as much detail as possible. Let’s take a quick look at the three tools.

- AWS Service Enumeration

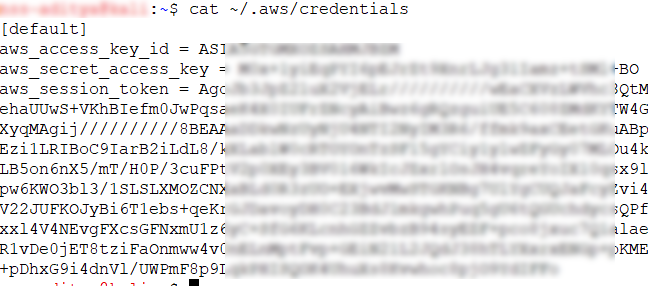

Once IAM credentials are obtained, the first port of call is to check whether they work by loading them into the AWS CLI.

To confirm that the credentials work, we make an “aws sts get-caller-identity” call.

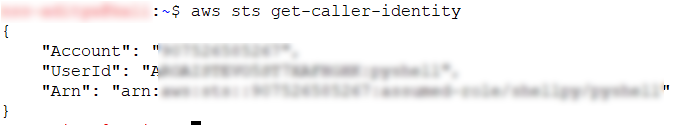
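The same check can be scripted. The sketch below is a minimal illustration, not code taken from aws_service_enum: it assumes the third-party boto3 library, and the helper names make_sts_client and whoami are hypothetical. STS GetCallerIdentity requires no IAM permissions, so it succeeds for any valid key pair.

```python
from typing import Any, Dict, Optional


def make_sts_client(access_key: str, secret_key: str,
                    session_token: Optional[str] = None,
                    region: str = "us-east-1") -> Any:
    """Hypothetical helper: build a boto3 STS client from obtained keys.

    boto3 (third-party) is imported lazily so the rest of the sketch
    does not depend on it.
    """
    import boto3

    session = boto3.Session(
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        aws_session_token=session_token,  # required for temporary credentials
        region_name=region,
    )
    return session.client("sts")


def whoami(sts_client: Any) -> Dict[str, str]:
    """Hypothetical helper mirroring `aws sts get-caller-identity`."""
    resp = sts_client.get_caller_identity()
    return {"account": resp["Account"], "arn": resp["Arn"], "user_id": resp["UserId"]}
```

For example, whoami(make_sts_client(ACCESS_KEY, SECRET_KEY, SESSION_TOKEN)) returns the account ID and ARN; with an invalid key pair, boto3 raises a ClientError instead.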

Next, we can enumerate IAM policies and services such as S3 buckets. For example:

```
aws s3 ls
```

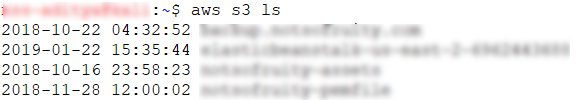
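The bucket listing can likewise be reproduced in Python. This is a hedged sketch assuming a boto3 S3 client (for example boto3.Session(...).client("s3")); list_bucket_names is a hypothetical helper. ListBuckets needs the s3:ListAllMyBuckets permission, which low-privileged credentials often lack.

```python
from typing import Any, List


def list_bucket_names(s3_client: Any) -> List[str]:
    """Hypothetical helper mirroring `aws s3 ls`.

    s3_client is assumed to be a boto3 S3 client. If the credentials lack
    s3:ListAllMyBuckets, boto3 raises botocore.exceptions.ClientError
    (AccessDenied); it is deliberately not caught here.
    """
    response = s3_client.list_buckets()
    return [bucket["Name"] for bucket in response.get("Buckets", [])]
```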

You will soon realize that AWS is a beast when it comes to the number of services it offers, so we decided to automate the enumeration of all the resources with “aws_service_enum”.
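At its core, this kind of automation means trying many read-only API calls and recording which ones the credentials are allowed to make. The sketch below illustrates that idea under our own assumptions; it is not the aws_service_enum implementation. probe and aws_read_only_calls are hypothetical names, the call list is a small illustrative subset, and session is assumed to be a boto3.Session.

```python
from typing import Any, Callable, Dict


def probe(calls: Dict[str, Callable[[], Any]]) -> Dict[str, str]:
    """Hypothetical helper: run each call and record whether it succeeded.

    Any exception is recorded as a failure together with its class name
    (boto3 typically raises botocore.exceptions.ClientError for AccessDenied).
    """
    results: Dict[str, str] = {}
    for name, call in calls.items():
        try:
            call()
            results[name] = "allowed"
        except Exception as exc:
            results[name] = f"failed: {type(exc).__name__}"
    return results


def aws_read_only_calls(session: Any, region: str) -> Dict[str, Callable[[], Any]]:
    """Hypothetical, deliberately small subset of list/describe calls.

    Clients are only created when a lambda is invoked by probe().
    """
    return {
        "s3:ListBuckets": lambda: session.client("s3").list_buckets(),
        "iam:ListUsers": lambda: session.client("iam").list_users(),
        "ec2:DescribeInstances":
            lambda: session.client("ec2", region_name=region).describe_instances(),
        "lambda:ListFunctions":
            lambda: session.client("lambda", region_name=region).list_functions(),
        "rds:DescribeDBInstances":
            lambda: session.client("rds", region_name=region).describe_db_instances(),
    }
```

Passing the calls as zero-argument callables keeps the probing loop independent of boto3, so the same loop could be reused for other providers.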

Usage

```
usage: aws_service_enum.py [-h] --access-key  --secret-key
                           [--session-token] [--region]
                           [--region-all] [--verbose] [--s3-enumeration]
                           [--logs]

< AWS_SERVICE_ENUM Says "Hello, world!" >
---------------
        \   ^__^
         \  (oo)\_______
            (__)\       )\/\
                ||----w |
                ||     ||

required arguments:
  --access-key          AWS Access Key ID
  --secret-key          AWS Secret Key

optional arguments:
  -h, --help            show this help message and exit
  --session-token       AWS Security Token. Required if provided credentials do not have get-session-token access
  --region              Enter any value from given list
                        ap-northeast-1, ap-northeast-2, ap-northeast-3, ap-southeast-1, ap-southeast-2, ap-south-1
                        ca-central-1
                        eu-central-1, eu-west-1, eu-west-2, eu-west-3, eu-north-1
                        us-east-1, us-east-2, us-west-1, us-west-2
                        sa-east-1
  --region-all          Enumerate for all regions given above
  --verbose             Select for Verbose output
  --s3-enumeration      Enumerate possible S3 buckets associated with services like ElasticBeanstalk, Athena
  --logs                Create a log File in same directory
```

Most of the options are self-explanatory; however, I would like to draw your attention to the following options:

--region lets you specify a default region. If no region is selected, the tool enumerates all regions.

--s3-enumeration is a particularly interesting feature. In our earlier research on AWS Elastic Beanstalk, we discovered that AWS uses default naming patterns when it creates buckets for a service.

For example, if you create an Elastic Beanstalk application, AWS creates a bucket named elasticbeanstalk-REGIONNAME-ACCOUNTID, where REGIONNAME is the region of the Elastic Beanstalk environment and ACCOUNTID is the account ID of the role.

--s3-enumeration targets buckets that the compromised service can access but that cannot be discovered directly. For example, if you obtained Elastic Beanstalk credentials through SSRF and run aws s3 ls with them, the buckets associated with the service will not be listed. With --s3-enumeration, the tool guesses the bucket names and, if a bucket exists, lists (only lists) its contents.
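The bucket guessing can be sketched as follows. This assumes a boto3 S3 client and uses hypothetical helper names; the Elastic Beanstalk pattern comes from the text above, while the Athena pattern (aws-athena-query-results-ACCOUNTID-REGION, Athena's default query-results bucket) is our assumption about what Athena support might target. The region list mirrors the --region help text and, as described for --region, every region is tried when none is given. The account ID can be taken from the get-caller-identity output.

```python
from typing import Any, Dict, List, Optional

# Regions accepted by --region, copied from the help text above.
REGIONS = [
    "ap-northeast-1", "ap-northeast-2", "ap-northeast-3", "ap-southeast-1",
    "ap-southeast-2", "ap-south-1", "ca-central-1", "eu-central-1", "eu-west-1",
    "eu-west-2", "eu-west-3", "eu-north-1", "us-east-1", "us-east-2",
    "us-west-1", "us-west-2", "sa-east-1",
]


def resolve_regions(region: Optional[str]) -> List[str]:
    """Hypothetical helper: one region if given, otherwise all of them."""
    if region is None:
        return list(REGIONS)
    if region not in REGIONS:
        raise ValueError(f"unsupported region: {region}")
    return [region]


def candidate_buckets(account_id: str, region: Optional[str] = None) -> List[str]:
    """Hypothetical helper: guess service-created bucket names."""
    names: List[str] = []
    for r in resolve_regions(region):
        names.append(f"elasticbeanstalk-{r}-{account_id}")
        # Assumption: Athena's default query-results bucket naming.
        names.append(f"aws-athena-query-results-{account_id}-{r}")
    return names


def list_guessed_buckets(s3_client: Any, names: List[str],
                         max_keys: int = 100) -> Dict[str, List[str]]:
    """Hypothetical helper: list (only list) keys of guessed buckets that answer."""
    found: Dict[str, List[str]] = {}
    for name in names:
        try:
            resp = s3_client.list_objects_v2(Bucket=name, MaxKeys=max_keys)
        except Exception:  # boto3 raises ClientError for NoSuchBucket / AccessDenied
            continue
        found[name] = [obj["Key"] for obj in resp.get("Contents", [])]
    return found
```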

An example output of the tool is given below:

- GCP Service Enumeration

GCP uses the concept of service accounts. These service accounts provide temporary access tokens (also known as OAuth access tokens) through the metadata API on Compute Engine and Cloud Functions instances. We will be focusing on what to do once an “OAuth access token” is obtained.

Currently, the services an “OAuth access token” can reach can only be determined by calling the REST APIs behind the scopes listed at https://developers.google.com/identity/protocols/googlescopes, or through the Python client for those APIs (https://github.com/googleapis/google-api-python-client).

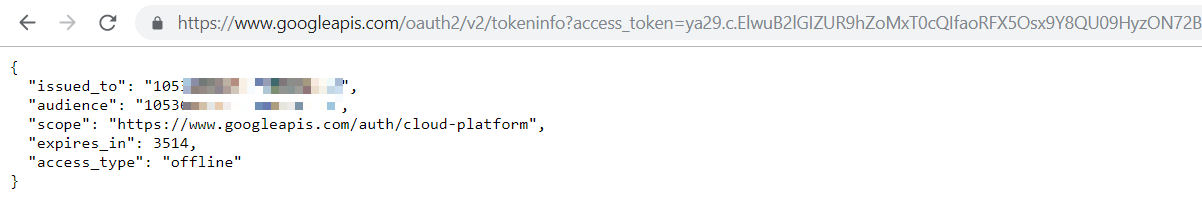
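For context, this is roughly what a metadata API request for a token looks like. This minimal sketch uses only the Python standard library; the helper names are hypothetical, and the endpoint and Metadata-Flavor: Google header are those of the v1 Compute Engine metadata server, which rejects requests lacking that header. Nothing is sent until the request is passed to urllib.request.urlopen from inside the instance.

```python
import json
import urllib.request

# v1 metadata server endpoint for the instance's default service account token.
METADATA_TOKEN_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/token"
)


def metadata_token_request() -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) the token request.

    The v1 metadata server rejects requests without 'Metadata-Flavor: Google'.
    """
    return urllib.request.Request(METADATA_TOKEN_URL,
                                  headers={"Metadata-Flavor": "Google"})


def parse_token_response(body: bytes) -> str:
    """Hypothetical helper: the JSON body holds access_token, expires_in, token_type."""
    return json.loads(body)["access_token"]
```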

The first port of call is to check the scope and other details of the “OAuth access token” through the https://www.googleapis.com/oauth2/v2/tokeninfo?access_token={insert_token_here} endpoint.

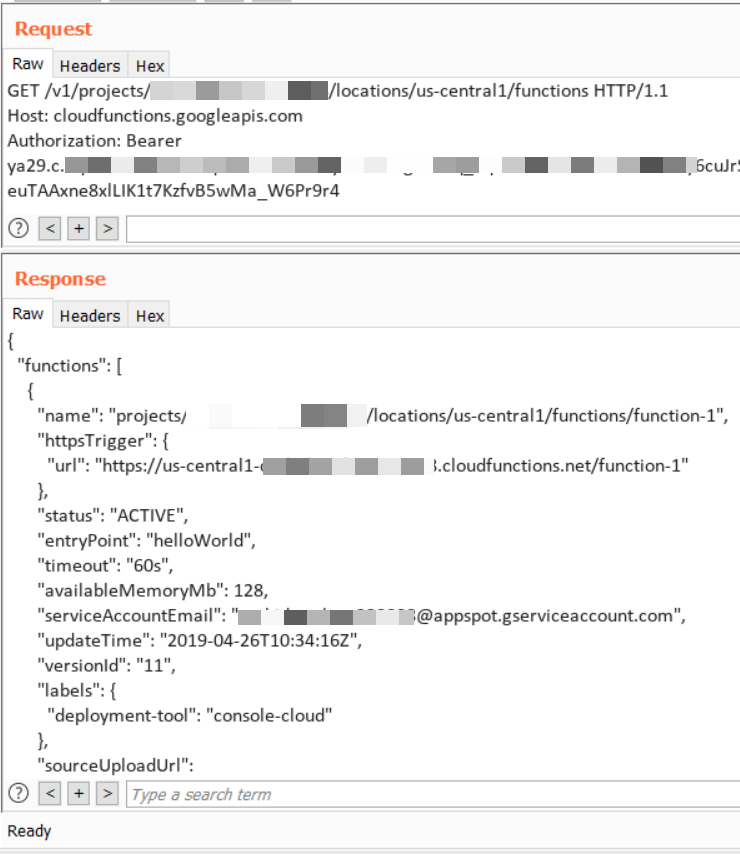
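The tokeninfo lookup is easy to script with the standard library. This is a sketch with hypothetical helper names; it relies only on the response's scope field, which holds a space-separated list of scope URLs.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List

TOKENINFO_URL = "https://www.googleapis.com/oauth2/v2/tokeninfo"


def tokeninfo_url(access_token: str) -> str:
    """Hypothetical helper: URL-encode the token into the tokeninfo query string."""
    return TOKENINFO_URL + "?" + urllib.parse.urlencode({"access_token": access_token})


def fetch_tokeninfo(access_token: str) -> Dict[str, Any]:
    """Hypothetical helper: GET tokeninfo; an HTTPError usually means an invalid or expired token."""
    with urllib.request.urlopen(tokeninfo_url(access_token), timeout=10) as resp:
        return json.loads(resp.read())


def scopes(info: Dict[str, Any]) -> List[str]:
    """Hypothetical helper: split the space-separated 'scope' field."""
    return info.get("scope", "").split()
```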

Depending on the scope, services can then be queried through their REST APIs. For example, as shown below, we used an “OAuth access token” from an intentionally vulnerable instance to fetch the list of "Cloud Functions" in the "us-central1" region.
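A Cloud Functions listing request of this kind might be built as follows. This sketch assumes the Cloud Functions v1 REST endpoint (projects.locations.functions.list) and a Bearer Authorization header; functions_list_request and function_names are hypothetical helpers, and demo-project is a placeholder project ID.

```python
import urllib.request
from typing import Any, Dict, List


def functions_list_request(access_token: str, project_id: str,
                           region: str) -> urllib.request.Request:
    """Hypothetical helper: Cloud Functions v1 list call authorised by the token."""
    url = (f"https://cloudfunctions.googleapis.com/v1/projects/{project_id}"
           f"/locations/{region}/functions")
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {access_token}"})


def function_names(response: Dict[str, Any]) -> List[str]:
    """Hypothetical helper: 'functions' is absent when the region has none."""
    return [fn["name"] for fn in response.get("functions", [])]
```

Passing the request to urllib.request.urlopen performs the call; a 403 response indicates that the token's scope or the service account's roles do not cover Cloud Functions.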

This tedious task is automated using our “gcp_service_enum” tool.

Usage

```
usage: gcp_service_enum.py [-h] --access-token  [--region] [--project-id] [--verbose]
                           [--logs]

< GCP_SERVICE_ENUM Says "Hello, world!" >
---------------
        \   ^__^
         \  (oo)\_______
            (__)\       )\/\
                ||----w |
                ||     ||

required arguments:
  --access-token        GCP oauth Access Token

optional arguments:
  -h, --help            show this help message and exit
  --region              Enter any value from given list
                        ap-northeast-1, ap-northeast-2, ap-northeast-3, ap-southeast-1, ap-southeast-2, ap-south-1, ca-central-1
                        eu-central-1, eu-west-1, eu-west-2, eu-west-3, eu-north-1
                        us-east-1, us-east-2, us-west-1, us-west-2
                        sa-east-1
  --project-id          ProjectID
  --verbose             Select for Verbose output
  --logs                Create a log File in same directory
```

An example output of the tool is given below:

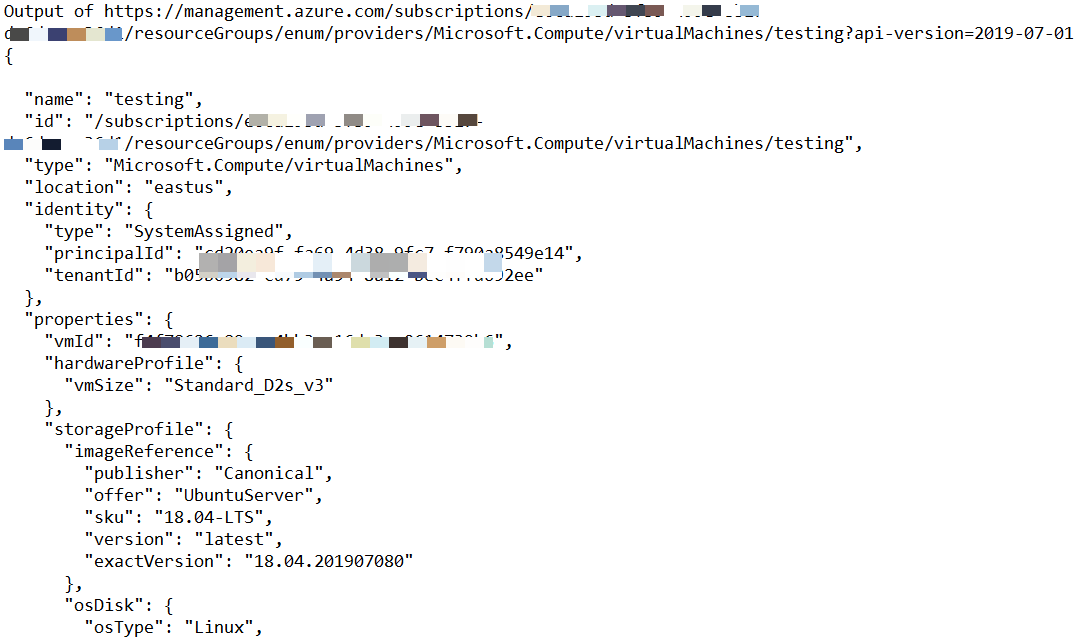

- Azure Service Enumeration

The Azure managed identities feature provides Azure services with an automatically managed identity in Azure AD. You can use this identity to authenticate to any service that supports Azure AD authentication, including Key Vault, without storing any credentials in your code.

A temporary access token for a managed identity can be requested from the Azure Instance Metadata Service (IMDS) endpoint. We will be focusing on what to do once such an “access token” is obtained.

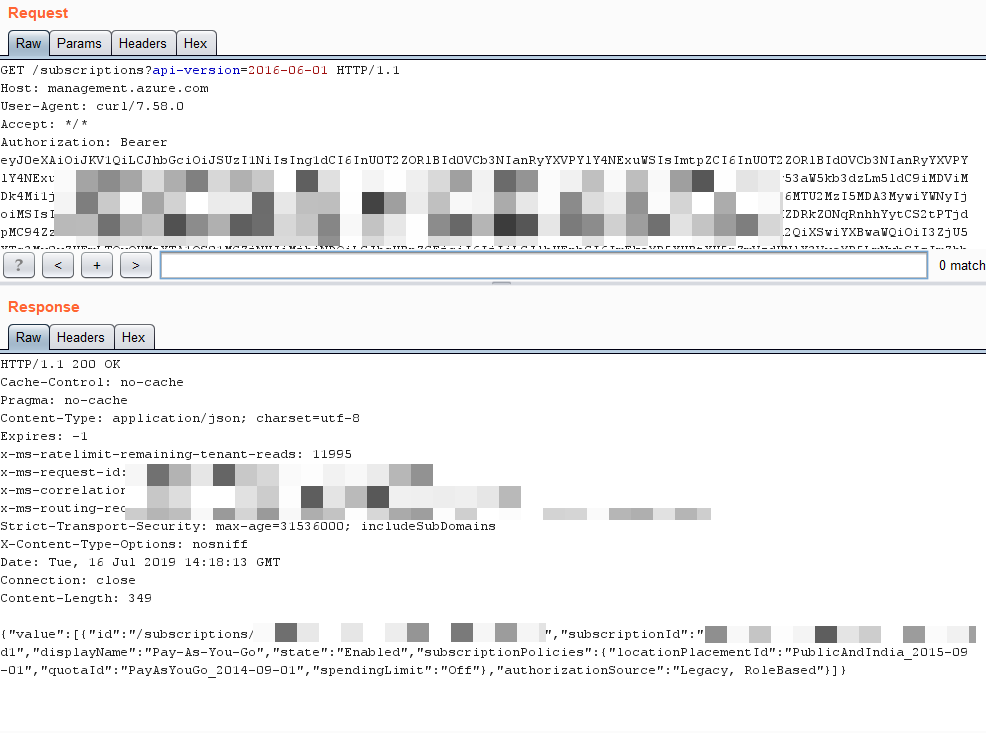
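For reference, a managed identity token request to IMDS can be built as below. This is a standard-library sketch with hypothetical helper names; it assumes api-version 2018-02-01 and the Azure Resource Manager resource https://management.azure.com/. IMDS only answers requests that carry the Metadata: true header, and only from inside the VM.

```python
import json
import urllib.parse
import urllib.request

IMDS_TOKEN_URL = "http://169.254.169.254/metadata/identity/oauth2/token"


def imds_token_request(resource: str = "https://management.azure.com/") -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) the IMDS token request."""
    query = urllib.parse.urlencode({"api-version": "2018-02-01", "resource": resource})
    # IMDS rejects requests without the 'Metadata: true' header.
    return urllib.request.Request(f"{IMDS_TOKEN_URL}?{query}", headers={"Metadata": "true"})


def parse_imds_token(body: bytes) -> str:
    """Hypothetical helper: pull access_token out of the JSON response."""
    return json.loads(body)["access_token"]
```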

The first port of call is to list the subscriptions associated with the access token, using the Subscriptions - List API (https://docs.microsoft.com/en-us/rest/api/resources/subscriptions/list).

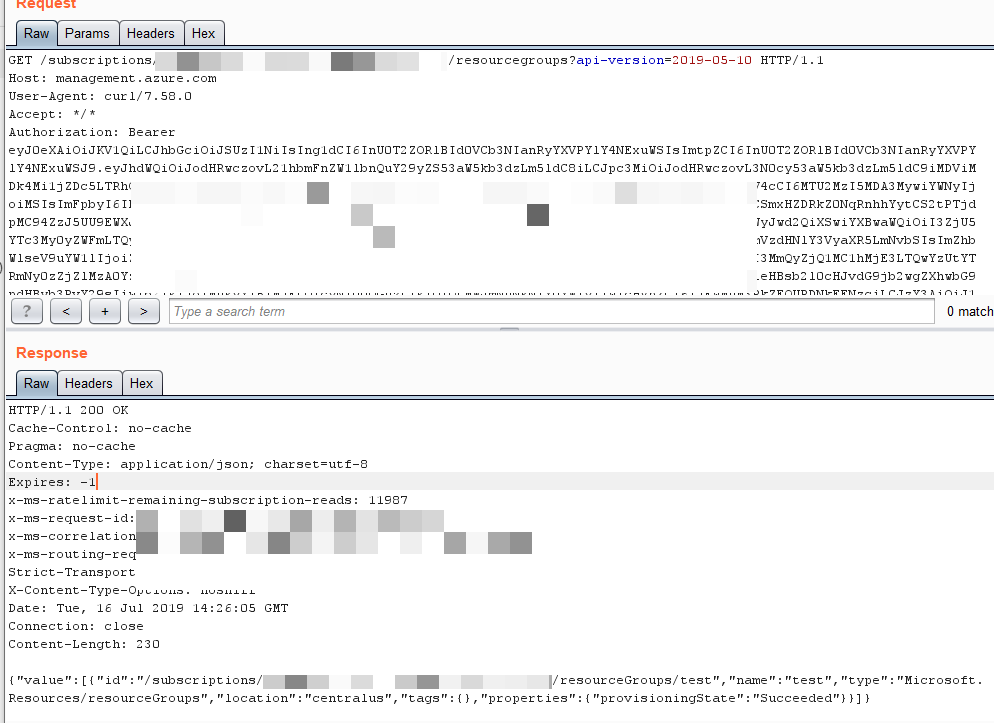
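Listing subscriptions can be scripted with the standard library. This sketch assumes api-version 2020-01-01 of the Subscriptions - List API; arm_request, list_all and subscription_ids are hypothetical helpers, and fetch is any function that sends a request and returns the parsed JSON. Azure Resource Manager list responses put results in value and, when more pages exist, a nextLink URL, which the loop follows.

```python
import urllib.request
from typing import Any, Callable, Dict, List

ARM = "https://management.azure.com"
Fetch = Callable[[urllib.request.Request], Dict[str, Any]]


def arm_request(access_token: str, url: str) -> urllib.request.Request:
    """Hypothetical helper: every ARM call carries the token as a Bearer header."""
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {access_token}"})


def list_all(fetch: Fetch, access_token: str, url: str) -> List[Dict[str, Any]]:
    """Hypothetical helper: collect 'value' items, following 'nextLink' until absent."""
    items: List[Dict[str, Any]] = []
    next_url = url
    while next_url:
        page = fetch(arm_request(access_token, next_url))
        items.extend(page.get("value", []))
        next_url = page.get("nextLink")
    return items


def subscription_ids(fetch: Fetch, access_token: str) -> List[str]:
    """Hypothetical helper: Subscriptions - List, assuming api-version 2020-01-01."""
    subs = list_all(fetch, access_token, f"{ARM}/subscriptions?api-version=2020-01-01")
    return [sub["subscriptionId"] for sub in subs]
```

In real use, fetch could be lambda req: json.loads(urllib.request.urlopen(req, timeout=10).read()); injecting it keeps the paging logic testable offline.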

Any of the services documented at https://docs.microsoft.com/en-us/rest/api/azure/ can then be used to fetch or modify information and resources by supplying the “subscriptionId” and the “access token”.
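As an example of such a follow-up call, the sketch below builds the Resources - List URL for one subscription and summarises the returned resources by type. It assumes api-version 2021-04-01; resources_url and summarise_resources are hypothetical helpers, and the request needs the same Authorization: Bearer header as any other Azure Resource Manager call.

```python
from collections import Counter
from typing import Any, Dict, List


def resources_url(subscription_id: str, api_version: str = "2021-04-01") -> str:
    """Hypothetical helper: Resources - List for one subscription."""
    return (f"https://management.azure.com/subscriptions/{subscription_id}"
            f"/resources?api-version={api_version}")


def summarise_resources(resources: List[Dict[str, Any]]) -> Dict[str, int]:
    """Hypothetical helper: count returned resources by ARM type, e.g. Microsoft.KeyVault/vaults."""
    return dict(Counter(res["type"] for res in resources))
```

A summary such as {"Microsoft.KeyVault/vaults": 2} quickly shows which resource types are worth querying in more detail with the stolen token.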

These calls are automated using our “azure_service_enum” tool.

Usage

```
> python azure_service_enum.py
usage: azure_enum.py [-h] --access-token  [--logs]

<Azure_SERVICE_ENUM Says "Hello, world!" >
---------------
        \   ^__^
         \  (oo)\_______
            (__)\       )\/\
                ||----w |
                ||     ||

required arguments:
  --access-token        Azure Managed Identities Access Token

optional arguments:
  -h, --help            show this help message and exit
  --logs                Create a log File in same directory
```

Sample Azure enum output

These tools are built to extract information using partially privileged or low privileged credentials, focusing primarily on the pentester’s need to identify which resources stolen credentials can access.

These tools, along with a plethora of other tools, techniques and attack and defence scenarios, are covered in our Hacking and Securing Cloud Infrastructure training.

If you and your team are performing cloud-related security operations, whether pentesting, migrating your own infrastructure to the cloud or setting up a fresh cloud environment, this course will give you a holistic picture of cloud security. We also offer consultancy services for auditing and hardening your cloud infrastructure.