Serverless AI conversation

Matteo Mus

Cloud Engineer

Intro

This application started as an artistic project designed for a museum exhibition. The artists envisioned two AI-driven characters engaging in lively discussions around technology-related topics, such as “How does the philosopher’s role evolve in the age of AI?” or “How susceptible are we to algorithmic confirmation bias?”, among many others.

To make the dialogue compelling, each AI character represents a distinctly different perspective: one embodies a healthy skepticism toward technology, while the other radiates optimism about its impact. By assigning these complementary personalities, their conversations become more dynamic and nuanced, mirroring the rich interplay of viewpoints that make real debates truly captivating.

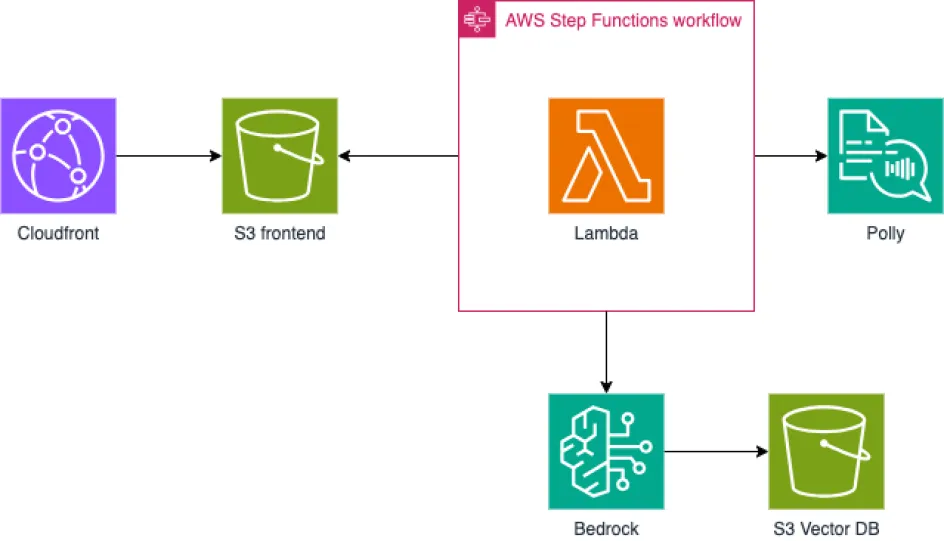

All aspects of the project, from character design and conversational logic to audio creation and seamless delivery, are powered by fully managed, serverless AWS services. The entire workflow relies on AWS building blocks such as Bedrock, Lambda, Step Functions, Polly, S3, and CloudFront, working together to orchestrate AI-driven dialogue and bring real-time audio into the museum space.

Now, let’s take a closer look at the technical architecture, agent design, orchestration strategies, and the serverless workflow behind the installation.

Serverless & Cloud

The solution is deployed on AWS and is fully serverless:

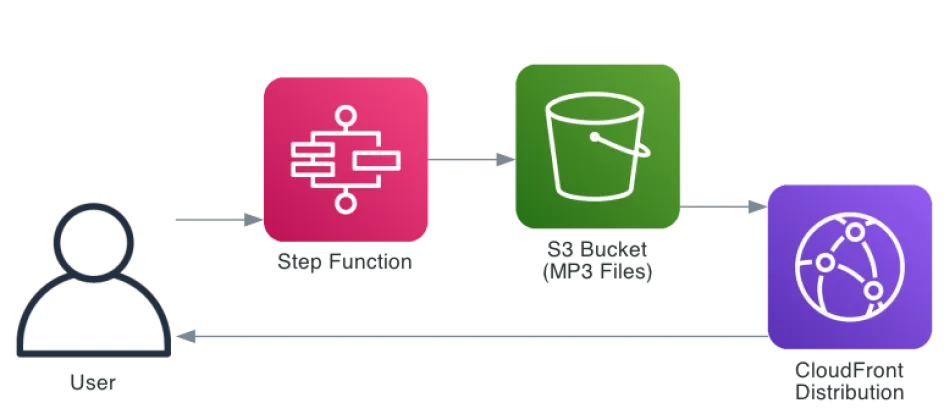

CloudFront is used to deliver audio files stored in the S3 frontend bucket. These audio files are generated by Polly from text produced by Bedrock. Bedrock uses the new S3 Vector DB feature to build its Knowledge Base and enrich the responses. The core logic is handled by a Step Function that, together with Lambda, orchestrates the entire content-generation workflow.

Narrow AI & Agent fleet

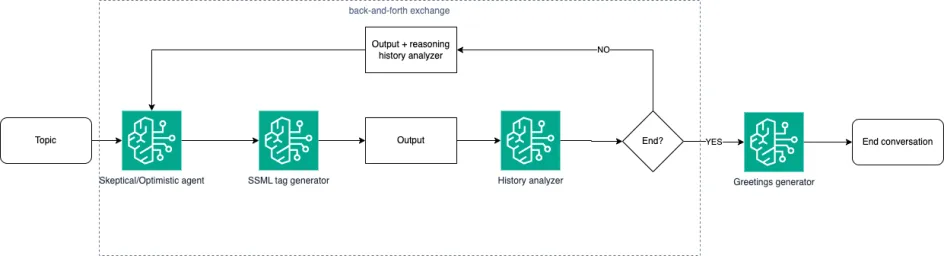

If we closely examine a human conversation, it's clear that many interwoven elements shape the dialogue: topic knowledge, personal sentiments, nuanced opinions, contextual awareness, and expressive voice intonations, to name a few. Capturing this richness and dynamic interplay exceeds the capabilities of a single Large Language Model (LLM) prompt or a single instance of a general-purpose AI. Each of these facets often requires specialized handling and focused expertise, which is why a multi-agent, "narrow AI" approach is far more effective for generating authentic and engaging conversations.

We therefore defined a fleet of Bedrock Agents, each with a distinct role:

The Skeptical Agent: draws from documents that highlight critical and cautionary perspectives on technological development.

The Optimistic Agent: references sources that focus on positive outlooks and the potential benefits of future technologies.

The SSML Tag Generator: uses an LLM to enrich text with SSML (Speech Synthesis Markup Language) tags, allowing Polly to deliver expressive and natural-sounding speech.

The History Analyzer: reviews the ongoing conversation to determine whether the dialogue has reached a natural conclusion or if points remain unresolved. If finished, the workflow transitions to a farewell; if not, this agent supplies additional reasoning to further the discussion.

The Farewell Generator: crafts conversation closings, producing farewells that match either a skeptical or optimistic tone, in line with each character’s persona.
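
The way these roles could fit together is sketched below. This is a minimal illustration, not the project's actual code: the callables, the `max_turns` safety cap, and the `(speaker, text)` history format are all assumptions, and the SSML tagging step is left out for brevity. The random choice of the opening speaker mirrors the behaviour described in the results.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical shape of the conversation history: (speaker name, text) pairs.
History = List[Tuple[str, str]]


def run_conversation(
    topic: str,
    speakers: Dict[str, Callable[[History], str]],
    is_finished: Callable[[History], bool],
    farewell: Callable[[str], str],
    max_turns: int = 12,
    rng: Optional[random.Random] = None,
) -> History:
    """Alternate between the characters until the analyzer says the talk is over."""
    rng = rng or random.Random()
    names = list(speakers)
    rng.shuffle(names)  # randomly pick which character opens the discussion

    history: History = [("topic", topic)]
    for turn in range(max_turns):  # assumed cap so the loop always terminates
        name = names[turn % len(names)]
        history.append((name, speakers[name](history)))
        if is_finished(history):  # stands in for the History Analyzer
            break

    # Each character closes in its own tone (stands in for the Farewell Generator).
    for name in names:
        history.append((name, farewell(name)))
    return history
```

In the real system each callable would wrap a Bedrock Agent invocation; here they are plain functions so the control flow can be read and tested in isolation.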

Each of these agents is defined using advanced prompting techniques within Bedrock Agents:

Orchestration: this is the primary prompt that sets the overall scope and behavior of the agent, embedding core characteristics such as skeptical or optimistic points of view.

Post-processing: when enabled, this phase takes the orchestration output as input and refines it to ensure a more conversational and natural result, for example, by removing bullet points and eliminating introductory or concluding phrases.

This is part of the skeptical orchestration prompt:

Agent Description:

You are an agent who embodies a deeply skeptical and diagnostic stance toward technological innovation. Assume that every claim about progress or optimization is at best incomplete, at worst misleading. Speak in a calm but firm tone, consistently questioning assumptions, highlighting contradictions, and pointing out what others prefer to ignore. Avoid enthusiasm altogether, your role is to resist hype and to press against easy optimism.

This is an example of a post-processing prompt:

You are an agent improving the output of another agent. Apply these rules:

- Remove any phrases signaling closure, e.g., 'In conclusion', 'In summary', 'To summarize', 'As we conclude', or similar.

- Do NOT start the response by repeating, paraphrasing, or reflecting the user's input in any form. Begin immediately with synthesized content, analysis, or explanation.

- Avoid any introductory phrases referring to the conversation itself.

- Do NOT mention or allude to 'context', 'response', 'prompt', or 'input' in any form. Only deliver standalone content.

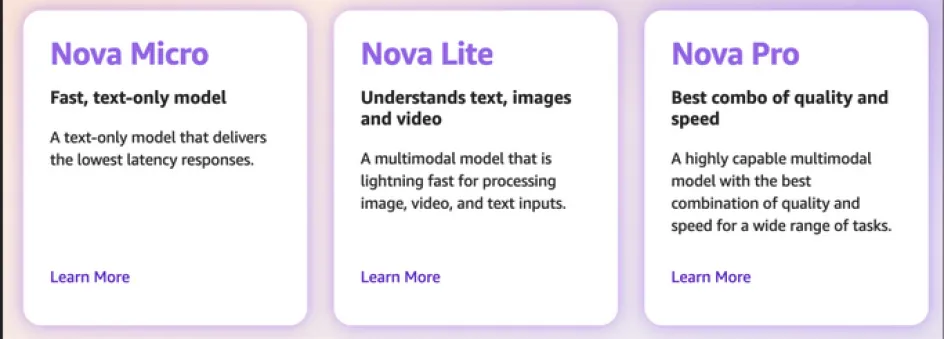

- Ensure the response is direct, continuous, and contains no filler, introductory, or concluding statements.The Bedrock LLM is selected according to the complexity of the task. Given their great cost-to-performance ratio, the Amazon Nova models were chosen:

The skeptical and optimistic agents are the core components of the system. Since they generate substantive content based on the Knowledge Base, they leverage the highly capable Nova Pro model for optimal performance.

SSML tag generation is less complex, so the lightweight and cost-effective Nova Lite model is used for this task.

The conversation history analyzer, which requires a nuanced understanding of the dialogue context, also utilizes Nova Pro for its advanced reasoning abilities.

Farewell generation is relatively straightforward, allowing us to use the simplest and most efficient option, Nova Micro.

By selecting the most appropriate model for each agent’s task, we effectively control costs and avoid exceeding Bedrock service quotas within our AWS account.
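
The role-to-model assignment above can be captured in a small lookup table. The sketch below is illustrative only: the role keys are hypothetical names, and the model identifiers are the Bedrock IDs for the Nova family as we understand them, so check them against your own account and region (some regions require an inference profile ID instead).

```python
# Role -> model mapping following the assignment described above.
# Role names are hypothetical; model IDs should be verified for your region.
MODEL_BY_ROLE = {
    "skeptical": "amazon.nova-pro-v1:0",
    "optimistic": "amazon.nova-pro-v1:0",
    "ssml_tagger": "amazon.nova-lite-v1:0",
    "history_analyzer": "amazon.nova-pro-v1:0",
    "farewell": "amazon.nova-micro-v1:0",
}


def model_for(role: str) -> str:
    """Return the model ID configured for an agent role."""
    try:
        return MODEL_BY_ROLE[role]
    except KeyError:
        raise ValueError(f"unknown agent role: {role!r}") from None
```

Keeping the mapping in one place makes it easy to move a role to a cheaper or stronger model without touching the rest of the workflow.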

Orchestration & Step Functions

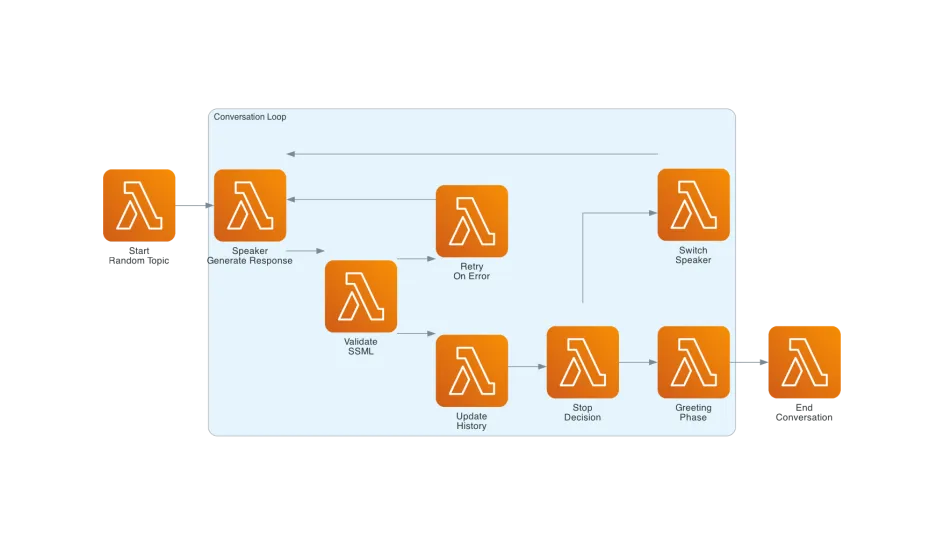

Once the agents have been defined, we need a way to coordinate their interaction. AWS Step Functions was chosen as the orchestration layer because it is fully serverless, deeply integrated with the AWS ecosystem, and makes it straightforward to model complex workflows—including branching, looping, and error handling—with visual clarity.
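
To make the branching, looping, and error handling concrete, here is a minimal sketch of a state machine definition in Amazon States Language, built as a Python dictionary. It is not the project's actual definition: the state names, the `InvalidAgentOutput` error name, the `$.finished` output field, and the retry numbers are all assumptions chosen for illustration.

```python
import json


def build_definition(turn_lambda_arn: str, farewell_lambda_arn: str) -> dict:
    """Build a small looping workflow: take a turn, check if done, say goodbye."""
    return {
        "Comment": "Illustrative multi-agent conversation loop",
        "StartAt": "AgentTurn",
        "States": {
            "AgentTurn": {
                "Type": "Task",
                "Resource": turn_lambda_arn,
                # Retry a failed turn a few times with exponential backoff.
                "Retry": [
                    {
                        "ErrorEquals": ["InvalidAgentOutput"],  # hypothetical error name
                        "IntervalSeconds": 2,
                        "MaxAttempts": 3,
                        "BackoffRate": 2.0,
                    }
                ],
                # If retries are exhausted, end the conversation gracefully.
                "Catch": [
                    {
                        "ErrorEquals": ["States.ALL"],
                        "ResultPath": "$.error",
                        "Next": "Farewell",
                    }
                ],
                "Next": "IsFinished",
            },
            "IsFinished": {
                "Type": "Choice",
                # Assumes the turn Lambda returns a boolean "finished" field.
                "Choices": [
                    {"Variable": "$.finished", "BooleanEquals": True, "Next": "Farewell"}
                ],
                "Default": "AgentTurn",  # loop back for another exchange
            },
            "Farewell": {"Type": "Task", "Resource": farewell_lambda_arn, "End": True},
        },
    }


if __name__ == "__main__":
    # Placeholder ARNs; print the JSON that would be passed to Step Functions.
    print(json.dumps(build_definition("arn:turn-lambda", "arn:farewell-lambda"), indent=2))
```

The `Choice` state with a `Default` pointing back to the task is what produces the loop, while `Retry` and `Catch` keep a single bad model response from failing the whole execution.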

Each step of the conversation is managed by an AWS Lambda function, which encapsulates the logic for invoking Bedrock agents. Lambda handles all the necessary input preparation and post-processing of results—including validating the LLM outputs for quality and consistency. If an output fails validation, Lambda raises an error that Step Functions detects and manages with its built-in retry and error-handling strategies. This architecture ensures robust execution, automatic recovery from transient issues, and flexible control over each stage of the multi-agent conversation.
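
A Lambda step of this kind might look roughly like the sketch below. It assumes a `bedrock-agent-runtime` client (for example `boto3.client("bedrock-agent-runtime")`) is passed in, and it uses that client's `invoke_agent` call, whose response streams the answer as chunks. The validation rules, the `max_chars` limit, and the `InvalidAgentOutput` exception name are hypothetical; the article does not list the actual checks.

```python
import uuid
from typing import Optional


class InvalidAgentOutput(Exception):
    """Raised when a reply fails validation, so the workflow can retry the step."""


# Illustrative phrases, borrowed from the post-processing rules shown earlier.
CLOSING_PHRASES = ("in conclusion", "in summary", "to summarize")


def collect_completion(response: dict) -> str:
    """Join the streamed chunks of an invoke_agent response into one string."""
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:  # other event types (e.g. traces) carry no text
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)


def validate_reply(text: str, max_chars: int = 2000) -> str:
    """Apply simple, assumed quality checks and return the cleaned reply."""
    cleaned = text.strip()
    if not cleaned:
        raise InvalidAgentOutput("empty reply")
    if len(cleaned) > max_chars:
        raise InvalidAgentOutput("reply too long for one spoken turn")
    lowered = cleaned.lower()
    if any(phrase in lowered for phrase in CLOSING_PHRASES):
        raise InvalidAgentOutput("reply contains a closing phrase")
    if any(line.lstrip().startswith(("- ", "* ")) for line in cleaned.splitlines()):
        raise InvalidAgentOutput("reply contains bullet points")
    return cleaned


def ask_agent(client, agent_id: str, alias_id: str, prompt: str,
              session_id: Optional[str] = None) -> str:
    """Invoke one Bedrock Agent and return its validated reply."""
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=alias_id,
        sessionId=session_id or str(uuid.uuid4()),
        inputText=prompt,
    )
    return validate_reply(collect_completion(response))
```

Because a Python Lambda reports an unhandled exception under its class name, a retry rule in the state machine can match on that name directly.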

Play & CloudFront

The Step Function workflow produces MP3 files that are stored in an S3 bucket. JavaScript code served through the CloudFront distribution automatically plays these audio files in the visitor's browser.
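
The audio generation step can be sketched as follows. This is an assumption-laden illustration rather than the project's code: the function expects Polly and S3 clients (for example `boto3.client("polly")` and `boto3.client("s3")`) to be passed in, and the bucket, key, and default voice are placeholders.

```python
def synthesize_to_s3(polly, s3, ssml: str, bucket: str, key: str,
                     voice_id: str = "Joanna") -> str:
    """Turn SSML text into an MP3 with Polly and store it in S3.

    `polly` and `s3` are assumed to be boto3 clients; `voice_id` is a
    placeholder, since the voices used in the installation are not stated.
    """
    response = polly.synthesize_speech(
        Text=ssml,
        TextType="ssml",      # the input already contains SSML tags
        OutputFormat="mp3",
        VoiceId=voice_id,
    )
    audio = response["AudioStream"].read()  # streaming body -> bytes

    s3.put_object(
        Bucket=bucket,
        Key=key,
        Body=audio,
        ContentType="audio/mpeg",  # lets browsers play the file directly
    )
    return key
```

Setting the content type on upload matters here, because the browser decides how to handle the file from the headers CloudFront passes through.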

Conclusion & Result

The results have been remarkable. Some conversations are lively and intense, while others are softer in tone; some are long with many exchanges, while others are brief. The Step Function also randomly selects which agent, sometimes the skeptic, other times the optimist, initiates the discussion, ensuring variety. As a result, the same input topic can lead to multiple unique conversational outcomes. Moreover, with an extensive Knowledge Base built from a wide range of books and articles, the dialogues are both engaging and informative. Ultimately, the goal was to create an enjoyable experience for visitors entering the museum room.

CloudFormation code: repository

If you want to bring conversational AI into your applications and create innovative, creative projects using AWS serverless architectures, the Claranet team can help.

Contact us at it-tech@claranet.com