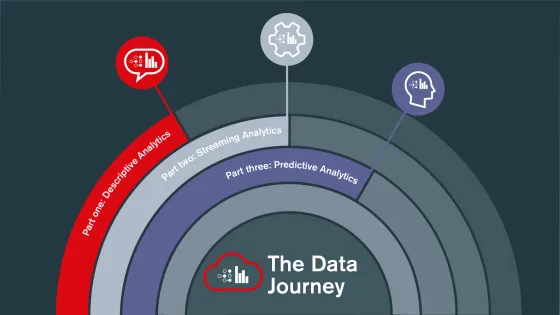

The data journey - part three: predictive analytics

In this third post of a four-part series we look at how we can project our analysis into the future.

Every business has data. Some businesses spin gold from their data; others merely collect it in the hope of extracting value someday. While there is no easy path to maximising the value of your data, there is at least a clear journey.

In our previous posts we mastered our business's past; now we can look to the future with predictive analytics. Here we are concerned with what is likely to happen, given what has happened before. This is where machine learning comes to the fore, although it is worth noting that it is not the only technique. Ultimately, we are constructing a predictive model from which we can draw inferences that inform our decision making.

To build the most accurate model, we typically need as much data as we can possibly get. Think back to high school statistics: our data is a sample of the entire population we're interested in. The larger the sample, the more of the possible outcomes we are likely to have seen, and the less likely we are to encounter a hitherto unseen Black Swan.
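To put a rough number on that intuition, here is a minimal worked example. It assumes each record independently has the same small probability $p$ of showing some rare event, which real business data rarely satisfies exactly. The chance that a sample of $n$ records contains at least one occurrence is then:

$$
P(\text{seen at least once}) = 1 - (1 - p)^{n} \approx 1 - e^{-np}
$$

For an event that affects one record in a thousand ($p = 0.001$), a sample of $n = 1{,}000$ records gives $1 - 0.999^{1000} \approx 0.632$, while $n = 10{,}000$ gives $1 - 0.999^{10000} > 0.9999$. Ten times the data turns roughly a two-in-three chance of having seen the event into near certainty.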

Looking back at our Data Warehouse, there are two problems: we've extracted only a subset of our data (often only the structured data), and we've moulded it to conform to a particular model. What of the rest of the data? What if there is something in our business we haven't modelled that is actually highly predictive? We need a different way to store and access our data: the Data Lake.

At its simplest, a Data Lake is an object store where we can put all our data, structured or unstructured. We don't manipulate the data to conform to any pre-existing model; we are concerned only with storage. That said, a lot of work can go into designing an efficient Data Lake, and this shouldn't be underestimated. Just because the data is there doesn't mean you can query it efficiently: without a sensible layout, finding the records of interest means reading everything. A poorly designed Data Lake quickly becomes cost-prohibitive if every query scans petabytes of data.
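One common way to avoid scanning everything is to partition the data by the columns most queries filter on, for example by writing objects under Hive-style `year=.../month=...` prefixes so a query engine can skip whole folders. The Python below is a minimal sketch of that pruning step over a hypothetical list of object keys; the table name, key layout and helper functions are illustrative assumptions, not any particular engine's API.

```python
from datetime import date, timedelta


def object_key(table: str, day: date, part: int) -> str:
    # Hypothetical Hive-style layout: "column=value" folders encode the partition in the path.
    return (f"{table}/year={day.year}/month={day.month:02d}/"
            f"day={day.day:02d}/part-{part:04d}.parquet")


def partition_values(key: str) -> dict[str, str]:
    # Pull the "column=value" segments out of an object key.
    return dict(seg.split("=", 1) for seg in key.split("/") if "=" in seg)


def prune(keys: list[str], **filters: str) -> list[str]:
    # Keep only objects whose partition values match every filter, so the
    # files for other partitions are never opened, let alone scanned.
    return [k for k in keys
            if all(partition_values(k).get(col) == val for col, val in filters.items())]


# A hypothetical year of daily sales files, four files per day.
start = date(2023, 1, 1)
keys = [object_key("sales", start + timedelta(days=d), p)
        for d in range(365) for p in range(4)]

may = prune(keys, year="2023", month="05")
print(len(keys), len(may))  # 1460 124
```

Only 124 of the 1,460 objects survive the filter. Engines that understand partitioned layouts perform this pruning themselves, but only if the data was written with the layout in mind, which is why the upfront design effort matters.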

The real power of the Data Lake is the ability to model on demand. With the data stored in as raw a form as possible, we are free to explore as many models as we like. Contrast this with the model-first approach of a Data Warehouse, where the slightest change can take hours or days to take effect. Being able to experiment rapidly, without re-extracting, re-transforming and re-loading all our data from the source systems, saves a great deal of time.
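To make model-on-demand concrete, here is a minimal Python sketch assuming the lake holds raw JSON-lines purchase events; the field names and sample records are invented for illustration. Nothing is reshaped on the way in. Each "model" is just a function that reads the raw records and applies its own structure at query time, an approach often called schema-on-read.

```python
import json
from collections import Counter, defaultdict

# Hypothetical raw events exactly as they landed in the lake: untouched JSON lines,
# and not every record carries the same fields.
RAW = """\
{"customer": "c1", "product": "tea", "qty": 2, "price": 3.5, "channel": "web"}
{"customer": "c2", "product": "coffee", "qty": 1, "price": 4.0}
{"customer": "c1", "product": "coffee", "qty": 3, "price": 4.0, "channel": "store"}
"""


def read_events(raw: str):
    # Parse records only when a question is asked (schema-on-read).
    for line in raw.splitlines():
        if line.strip():
            yield json.loads(line)


def revenue_by_product(raw: str) -> dict[str, float]:
    # One model of the data: revenue per product.
    totals: defaultdict[str, float] = defaultdict(float)
    for event in read_events(raw):
        totals[event["product"]] += event["qty"] * event["price"]
    return dict(totals)


def orders_by_channel(raw: str) -> Counter:
    # A later question asked of the same raw data; records that predate
    # the "channel" field are labelled "unknown" at read time.
    return Counter(event.get("channel", "unknown") for event in read_events(raw))


print(revenue_by_product(RAW))  # {'tea': 7.0, 'coffee': 16.0}
print(orders_by_channel(RAW))
```

Adding the channel question later required no re-load: older records without a `channel` field are simply handled when they are read. In practice the raw lines would live in object storage and the functions would be queries in a distributed engine, but the principle is the same.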

So, what value does predictive analytics bring? Let me give two examples, both from retail: one that reduces costs and one that increases value. The purchase history of all our customers allows us to build product demand forecasting models. Knowing when certain products are likely to sell, and in what quantities, allows us to order from our suppliers at the right time, meaning less unsold inventory cluttering the warehouses and storerooms.
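As a hedged illustration of demand forecasting, the sketch below uses simple exponential smoothing, one of the most basic forecasting methods, to project next week's sales of a single product and turn that into an order quantity. The sales figures, the `alpha` value and the ordering rule are assumptions for illustration; a production model would also account for seasonality, promotions and supplier lead times.

```python
import math


def smoothed_forecast(history: list[float], alpha: float = 0.3) -> float:
    # Simple exponential smoothing: each new observation pulls the level
    # towards itself by a fraction alpha; the final level is the forecast.
    if not history:
        raise ValueError("need at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = float(history[0])
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def units_to_order(history: list[float], on_hand: int, alpha: float = 0.3) -> int:
    # Hypothetical ordering rule: cover the forecast shortfall, never order a negative amount.
    shortfall = smoothed_forecast(history, alpha) - on_hand
    return max(0, math.ceil(shortfall))


weekly_units = [120, 130, 125, 160, 170]  # hypothetical weekly sales for one product
print(smoothed_forecast(weekly_units, alpha=0.5))             # 156.25
print(units_to_order(weekly_units, on_hand=100, alpha=0.5))   # 57
```

A higher `alpha` reacts faster to recent changes, such as the jump to 160 and 170 units, at the cost of chasing noise; tuning it, or moving to a seasonal model, is the kind of experiment the Data Lake makes cheap.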

The purchase history of individual customers allows us to predict the likelihood of churn. Knowing when to send a waning customer a discount voucher for their favourite product could win that customer back. Equally, knowing that another customer is still loyal saves you from offering a needless discount.
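Churn prediction is usually done with a trained classifier, but a simple statistical heuristic shows the idea. The sketch below assumes, as a deliberate simplification, that each customer buys as a Poisson process at their historical average rate. The probability that a still-active customer goes $t$ days without a purchase is then $e^{-t/\bar{g}}$, where $\bar{g}$ is their mean gap between purchases, and a low value suggests the customer may be lapsing. The function name, purchase dates and the 0.05 voucher threshold are hypothetical.

```python
import math
from datetime import date


def still_active_likelihood(purchase_dates: list[date], today: date) -> float:
    # Needs at least two purchases to estimate how often this customer buys.
    if len(purchase_dates) < 2:
        raise ValueError("need at least two purchases to estimate a rate")
    ordered = sorted(purchase_dates)
    mean_gap = (ordered[-1] - ordered[0]).days / (len(ordered) - 1)
    if mean_gap <= 0:
        raise ValueError("purchases must span more than one day")
    days_since_last = max(0, (today - ordered[-1]).days)
    # Assumed Poisson purchase process: gaps are exponentially distributed,
    # so P(no purchase for t days) = exp(-t / mean_gap).
    return math.exp(-days_since_last / mean_gap)


VOUCHER_THRESHOLD = 0.05  # hypothetical cut-off; would be tuned against real outcomes

history = [date(2023, 1, 1), date(2023, 1, 11), date(2023, 1, 21), date(2023, 1, 31)]
for today in (date(2023, 2, 10), date(2023, 3, 12)):
    score = still_active_likelihood(history, today)
    action = "send voucher" if score < VOUCHER_THRESHOLD else "no discount needed"
    print(today, round(score, 3), action)
```

The customer buys every 10 days, so 10 days of silence scores about 0.368 and they are left alone; 40 days without a purchase drops the score to about 0.018, which triggers the voucher.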

Once you have a well-trained predictive model running happily in production, you'll soon create more. As you model your business better, you will develop a very powerful capability: the ability to experiment with the models and measure the impact. We'll explore this in our final post, when we look at prescriptive analytics.